Simple embodied neural model

This simple embodied neural model accompanies the preprint:

"Balanced activation in a simple embodied neural simulation"

by Peter J. Hellyer, Claudia Clopath, Angie A. Kehagia, Federico E. Turkheimer, Robert Leech

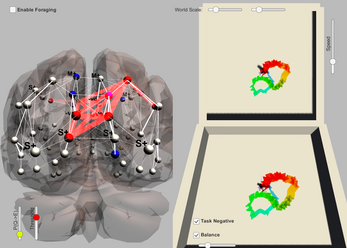

The game takes a simple model of the brain (of a kind often used to model spontaneous brain activity) and lets it control a simulated agent wandering around a simulated environment. This setup generates brain-environment feedback that shows why neural mechanisms are needed to balance activity. One balancing mechanism is inhibitory plasticity, where activity is dampened down within each brain region. Another is task-negative (or default) brain activity: activity that is on when you aren't doing anything and turns off when you start doing something.
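To make the idea of inhibitory plasticity concrete, here is a minimal sketch. It is not the model used in the game or the paper: it assumes each region has a fixed excitatory drive and a single local inhibition value that is nudged up whenever the region's activity is above a target rate and down whenever it is below. The function name `balance`, the parameters `learning_rate` and `steps`, and the drive values are all hypothetical.

```python
def balance(drives, target_rate=0.1, learning_rate=0.1, steps=200, plastic=True):
    """Return (activity, inhibition) per region after `steps` epochs.

    Toy illustration only: a hypothetical homeostatic rule, not the
    update rule from the paper.
    """
    inhibition = [0.0] * len(drives)
    activity = [0.0] * len(drives)
    for _ in range(steps):
        for i, drive in enumerate(drives):
            # Activity is the excitatory drive minus local inhibition, clipped to [0, 1].
            activity[i] = min(1.0, max(0.0, drive - inhibition[i]))
            if plastic:
                # Strengthen inhibition when the region is above target,
                # weaken it (never below zero) when the region is below target.
                delta = learning_rate * (activity[i] - target_rate)
                inhibition[i] = max(0.0, inhibition[i] + delta)
    return activity, inhibition


drives = [0.3, 0.5, 0.9]  # hypothetical excitatory drive per region
balanced, _ = balance(drives)
unbalanced, _ = balance(drives, plastic=False)
print([round(a, 3) for a in balanced])    # [0.1, 0.1, 0.1]
print([round(a, 3) for a in unbalanced])  # [0.3, 0.5, 0.9]
```

With plasticity switched on, every region settles at the target rate regardless of how strongly it is driven. With it switched off, activity simply tracks the drive.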

The model and its source code are available on GitHub: https://github.com/c3nl-neuraldynamics/Avatar/releases

To play the game, tweak the model parameters or turn either of the two balancing mechanisms on or off, and observe how the agent moves. For example, turn off both balancing mechanisms and watch what happens to the agent, then compare that with only one mechanism on, or with both.

You can change:

- the overall level of activity in the model (bizarrely named "P(Q->E)");
- how much activity propagates ("Threshold");
- the duration of each epoch of the model ("Speed"); we suggest setting this as fast (low) as possible, since the inhibitory plasticity can initially take a while;
- the type of activity balancing ("Task Negative" and/or "Local Balance");
- the target activity rate that the inhibitory plasticity should aim for (the setting below "Local Balance");
- the size/shape of the environment ("World Scale").

Finally, you can make the agent forage for spheres (Pac-Man style) to generate a high score.
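The sketch below shows how an activity-level probability and a propagation threshold could interact in a simple excitable network. It rests on an assumption: the label "P(Q->E)" suggests a probability of switching from a quiescent (Q) to an excited (E) state, as in three-state excitable models, so the sketch adds a refractory state (R). The state names, the `step` function, the `weights` matrix and the `p_r_to_q` recovery probability are hypothetical and not taken from the game's source.

```python
import random

Q, E, R = "Q", "E", "R"  # quiescent, excited, refractory (assumed state names)


def step(states, weights, p_q_to_e, threshold, p_r_to_q=0.5, rng=random):
    """Advance every node by one epoch and return the new list of states."""
    new_states = []
    for i, state in enumerate(states):
        if state == E:
            # An excited node always becomes refractory on the next epoch.
            new_states.append(R)
        elif state == R:
            # A refractory node recovers with probability p_r_to_q.
            new_states.append(Q if rng.random() < p_r_to_q else R)
        else:
            # Summed input from the nodes that are currently excited.
            drive = sum(w for w, other in zip(weights[i], states) if other == E)
            # A quiescent node fires if its input exceeds the threshold,
            # or spontaneously with probability p_q_to_e.
            fires = drive > threshold or rng.random() < p_q_to_e
            new_states.append(E if fires else Q)
    return new_states


# Three nodes; node 1 receives input of weight 0.5 from node 0.
weights = [[0.0, 0.0, 0.0],
           [0.5, 0.0, 0.0],
           [0.0, 0.0, 0.0]]
print(step([E, Q, Q], weights, p_q_to_e=0.0, threshold=0.2))  # ['R', 'E', 'Q']
```

In this sketch, raising `p_q_to_e` increases spontaneous activity everywhere, while lowering `threshold` lets activity spread more easily between connected nodes.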

You can also use the arrow keys (direction) and space bar (forward thrust) to navigate around the brain.

| Field | Value |
| --- | --- |
| Status | Released |
| Platforms | HTML5 |
| Author | leechbrain |
| Made with | Unity |